Advanced RAG Techniques: A Deep Dive into the BAiSICS Document Analysis Platform

Reuben McQueen

Explore how BAiSICS leverages advanced Retrieval Augmented Generation (RAG) techniques, knowledge graphs, and hybrid semantic search to revolutionise document processing for SMEs. Learn about its multi-stage retrieval pipeline, transparent sourcing, and enterprise-grade security features.

Introduction

As small-to-medium enterprises (SMEs) start to incorporate Generative AI into their daily operations, many find that conventional, generic solutions struggle to meet their specialised requirements. Even state-of-the-art large language models (LLMs) and popular AI-driven contract analysis tools can falter when it comes to scaling, accuracy, or handling domain-specific complexity. Against this backdrop, BAiSICS emerges as a cutting-edge platform that leverages advanced Retrieval Augmented Generation (RAG) techniques to revolutionise document processing and legal contract analysis. It is built around a simple, business-oriented user interface, and it does one thing and does it well.

In this technical deep dive, we will explore how BAiSICS employs knowledge graphs, hybrid semantic search, and sophisticated re-ranking strategies to achieve exceptional speed, accuracy, and cost-efficiency. We will also examine its architectural underpinnings, from OCR technology and handwriting recognition to ISO-compliant security measures, as well as the platform’s focus on transparent sourcing, where highlighted document segments let users verify answers instantly, ensuring maximum trust and clarity.

The Need for Advanced Retrieval Augmented Generation

SMEs often require AI systems capable of parsing vast, unstructured document sets—ranging from multi-hundred-page leases and intricate insurance policies to complex interconnected clauses in legal contracts. Basic LLM prompting or simple vector search-based approaches tend to lack the precision and reliability needed for domain-specific tasks.

RAG pipelines close this gap by augmenting generative models with highly relevant contextual data. Rather than relying on a single, monolithic model, BAiSICS orchestrates multiple retrieval steps, ensuring only the most pertinent, evidence-based information guides the final output. This approach optimises accuracy, speed, and cost-efficiency, whilst aligning closely with the user’s industry and compliance requirements.
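The sketch below gives a rough illustration of what augmenting a model with context means in practice. It packs already-ranked passages into a single prompt under a size budget and labels each passage with its source so the model can cite it. The function name, the source labels and the character budget are assumptions for illustration, not BAiSICS internals. The character budget stands in for a real token budget.

```python
def build_rag_prompt(question: str, passages: list[tuple[str, str]], max_chars: int = 2000) -> str:
    """Pack retrieved passages into a grounded prompt.

    `passages` is a list of (source_id, text) pairs, already sorted by relevance.
    A character budget stands in for a real token budget (an assumption).
    """
    context: list[str] = []
    used = 0
    for source_id, text in passages:
        block = f"[{source_id}] {text}"
        if used + len(block) > max_chars:
            break  # stop at the first passage that would overflow, keeping relevance order
        context.append(block)
        used += len(block)
    return (
        "Answer using only the sources below and cite the source label for every claim.\n\n"
        + "\n".join(context)
        + f"\n\nQuestion: {question}"
    )


# Hypothetical passages from one lease, best match first.
passages = [
    ("lease_a p.12", "The term ends on 31 March 2030."),
    ("lease_a p.40", "Rent is reviewed every five years."),
    ("lease_a p.77", "Schedule of condition. " * 200),
]
prompt = build_rag_prompt("When does the lease expire?", passages, max_chars=200)
print(prompt)
```

With a 200-character budget, the first two passages fit and the long schedule is left out. This keeps the prompt focused on the most relevant evidence.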

Key Innovations: Multi-Stage Retrieval & Knowledge Graph Integration

Multi-Stage Retrieval Pipelines

BAiSICS employs a multi-stage RAG pipeline that synthesises traditional keyword-based methods (such as BM25) with semantic vector embeddings and graph databases. Each iterative retrieval phase refines candidate text segments before presenting them to the LLM. By layering retrieval strategies, BAiSICS selects only contextually rich, domain-relevant excerpts, substantially boosting the quality and relevance of generated responses.
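The source does not say how BAiSICS merges keyword and vector results. One common approach is reciprocal rank fusion (RRF), sketched below alongside a from-scratch BM25 scorer. The following values are placeholders, not BAiSICS settings:

- The hand-written two-dimensional vectors stand in for real embeddings.
- The constant k = 60 is the value often used with RRF.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"\w+", text.lower())


def bm25_scores(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Okapi BM25 score of every document for the query."""
    tokenized = [tokenize(d) for d in docs]
    n = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n
    df = Counter(term for toks in tokenized for term in set(toks))
    q_terms = tokenize(query)
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in q_terms:
            if tf[term] == 0:
                continue
            idf = math.log(1 + (n - df[term] + 0.5) / (df[term] + 0.5))
            norm = tf[term] + k1 * (1 - b + b * len(toks) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


def cosine(u: list[float], v: list[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def ranking(scores: list[float]) -> list[int]:
    """Document indices ordered from best to worst score."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)


def reciprocal_rank_fusion(rankings: list[list[int]], k: int = 60) -> list[int]:
    """Sum 1 / (k + rank) across rankers and sort by the fused score."""
    fused: Counter = Counter()
    for rank_list in rankings:
        for position, doc_id in enumerate(rank_list, start=1):
            fused[doc_id] += 1.0 / (k + position)
    return [doc_id for doc_id, _ in fused.most_common()]


docs = [
    "The Tenant may renew this lease for a further term of five years.",
    "Rent under this lease is payable quarterly in advance.",
    "Either party may extend the agreement by giving six months notice.",
]
doc_vectors = [[0.9, 0.1], [0.1, 0.9], [1.0, 0.0]]  # placeholder embeddings
query = "renew lease"
query_vector = [1.0, 0.0]                            # placeholder embedding

keyword_rank = ranking(bm25_scores(query, docs))
dense_rank = ranking([cosine(query_vector, v) for v in doc_vectors])
fused = reciprocal_rank_fusion([keyword_rank, dense_rank])
print(fused)  # [0, 2, 1]
```

Document 2 shares no keywords with the query, so BM25 ranks it last. The dense ranking puts it first, and fusion places it above document 1.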

Domain-Specific Knowledge Graphs

At the heart of BAiSICS lie its custom knowledge graphs, encoding intricate relationships between legal concepts, contractual clauses, and compliance frameworks. When a user requests, for example, “Find the renewal clauses in these leases”, the knowledge graph identifies relevant passages—even without a direct textual match. This semantic capability ensures that retrieval is nuanced and logically sound, rather than merely keyword-based.
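Below is a toy version of concept-based retrieval. It works in three steps:

1. Concepts form a small graph.
2. Passages are tagged with concepts at ingestion time.
3. A breadth-first walk from the query concept collects related concepts, and any passage tagged with one of them is returned.

The tagging step is an assumption, since the source does not describe how BAiSICS links passages to its graph. All concept names and passage IDs are hypothetical.

```python
from collections import deque

# Hypothetical concept graph: each concept lists directly related concepts.
GRAPH = {
    "renewal": ["option to renew", "term extension"],
    "option to renew": ["renewal"],
    "term extension": ["renewal", "holding over"],
    "holding over": ["term extension"],
    "rent review": [],
}

# Hypothetical passages, each tagged with concepts when the document was ingested.
PASSAGES = {
    "lease_a:4.2": ("The Tenant has an option to take a new lease for five years.", {"option to renew"}),
    "lease_b:7.1": ("The Term may be extended by written notice.", {"term extension"}),
    "lease_c:3.0": ("The rent shall be reviewed every three years.", {"rent review"}),
}


def related_concepts(start: str, max_hops: int = 1) -> set[str]:
    """Breadth-first search: all concepts within `max_hops` edges of `start`."""
    depth = {start: 0}
    frontier = deque([start])
    while frontier:
        node = frontier.popleft()
        if depth[node] == max_hops:
            continue
        for neighbour in GRAPH.get(node, []):
            if neighbour not in depth:
                depth[neighbour] = depth[node] + 1
                frontier.append(neighbour)
    return set(depth)


def find_passages(concept: str, max_hops: int = 1) -> list[str]:
    """IDs of passages tagged with any concept near the query concept."""
    concepts = related_concepts(concept, max_hops)
    return sorted(pid for pid, (_text, tags) in PASSAGES.items() if tags & concepts)


hits = find_passages("renewal")
print(hits)  # ['lease_a:4.2', 'lease_b:7.1']
```

Neither matching passage contains the word renewal; both are reached through the graph. Raising `max_hops` widens the net, at the cost of precision.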

Hybrid Semantic Search & Re-Ranking

The platform combines semantic search with metadata-driven filtering to sharpen precision. A query like “force majeure clauses in German contracts from Q1 2024” benefits from both conceptual embeddings (for thematic alignment) and metadata (for language and date filtering). Post-retrieval, a re-ranking model, fine-tuned on business and legal documents, scores the top candidates. As a result, the final LLM prompt is as contextually relevant and authoritative as possible.
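The sketch below shows how this flow of filtering, then re-ranking, might look. It rests on several assumptions:

- The query's language and date constraints have already been parsed into structured filters.
- A simple word-overlap scorer stands in for the fine-tuned re-ranking model, whose details the source does not give.
- The `Chunk` fields are hypothetical.

```python
import re
from dataclasses import dataclass
from datetime import date


@dataclass
class Chunk:
    text: str
    language: str          # ISO 639-1 code stored as metadata at ingestion
    signed: date           # contract date stored as metadata
    retrieval_score: float  # first-stage (hybrid search) score


def quarter_bounds(year: int, quarter: int) -> tuple[date, date]:
    """Return the [start, end) dates of a calendar quarter."""
    start_month = 3 * (quarter - 1) + 1
    start = date(year, start_month, 1)
    end = date(year + 1, 1, 1) if quarter == 4 else date(year, start_month + 3, 1)
    return start, end


def metadata_filter(chunks: list[Chunk], language: str, year: int, quarter: int) -> list[Chunk]:
    start, end = quarter_bounds(year, quarter)
    return [c for c in chunks if c.language == language and start <= c.signed < end]


def overlap_score(query: str, text: str) -> float:
    """Placeholder re-ranker: share of query words found in the text."""
    q = set(re.findall(r"\w+", query.lower()))
    t = set(re.findall(r"\w+", text.lower()))
    return len(q & t) / len(q) if q else 0.0


def rerank(query: str, chunks: list[Chunk], top_n: int = 20, top_k: int = 5, scorer=overlap_score) -> list[Chunk]:
    # Keep the best first-stage candidates, then re-score them with the (slower) re-ranker.
    candidates = sorted(chunks, key=lambda c: c.retrieval_score, reverse=True)[:top_n]
    return sorted(candidates, key=lambda c: scorer(query, c.text), reverse=True)[:top_k]


# Texts are shown in English for readability.
chunks = [
    Chunk("Force majeure: neither party is liable for delays beyond its control.", "de", date(2024, 2, 10), 0.71),
    Chunk("Force majeure covers pandemics and strikes.", "de", date(2023, 11, 5), 0.80),
    Chunk("Force majeure events include flood and fire.", "fr", date(2024, 1, 20), 0.78),
    Chunk("Invoices are due within 30 days.", "de", date(2024, 3, 1), 0.74),
]
filtered = metadata_filter(chunks, language="de", year=2024, quarter=1)
top = rerank("force majeure clauses", filtered)
```

The metadata filter removes the 2023 contract and the French one. Re-ranking then moves the force majeure clause above the invoice clause, even though the invoice clause had the higher first-stage score.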

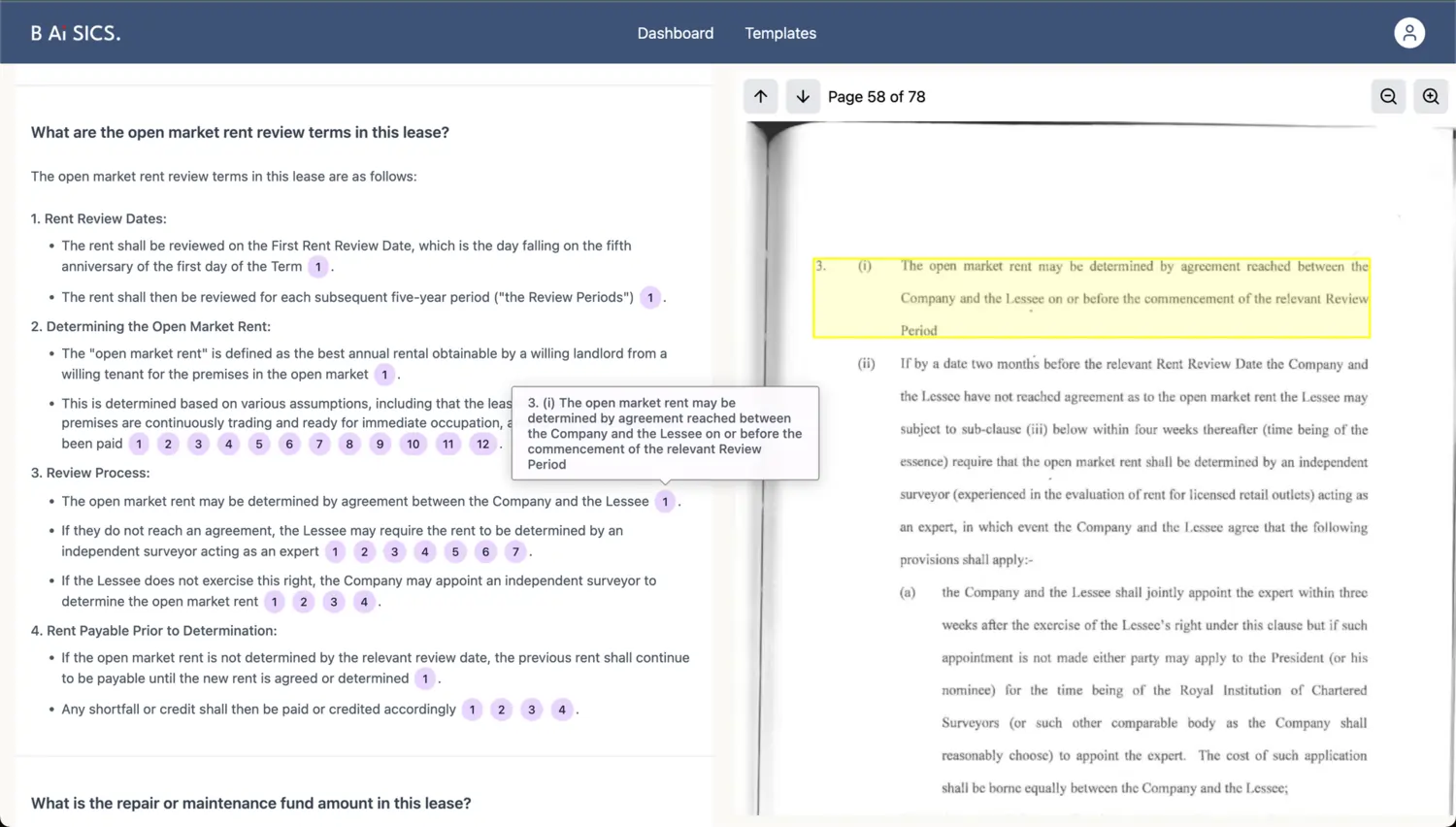

Sourcing, Transparency, and Highlighted Verification

In sectors such as law and finance, trust and verifiability are paramount. Users need to confirm the origins of extracted information and ensure no critical detail is lost in interpretation. BAiSICS addresses this by integrating source highlighting directly into its user interface. When the system produces answers—such as key clauses, expiry dates, or compliance notes—it simultaneously displays excerpts from the original document, with the relevant portions highlighted.

This transparent sourcing enables instant verification: users can see exactly where the AI derived its insights, eliminating ambiguity and fostering trust. Such a feature is invaluable for auditing, compliance checks, and providing legal professionals with the reassurance that the analysis genuinely reflects their source materials.
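The sketch below shows one simple way to map a quoted answer back onto its source for highlighting. The quote is matched case-insensitively and with flexible whitespace, because OCR line breaks rarely line up with the quote. The matched spans are then wrapped in `<mark>` tags. The function names and the exact-quote assumption are illustrative. The source does not describe how BAiSICS aligns answers to documents, and paraphrased answers would need fuzzy matching instead.

```python
import re
from typing import Optional


def locate(quote: str, source: str) -> Optional[tuple[int, int]]:
    """Find `quote` in `source`, tolerating differing whitespace and case."""
    words = quote.split()
    if not words:
        return None
    pattern = r"\s+".join(re.escape(w) for w in words)
    match = re.search(pattern, source, flags=re.IGNORECASE)
    return (match.start(), match.end()) if match else None


def merge_spans(spans: list[tuple[int, int]]) -> list[tuple[int, int]]:
    """Merge overlapping (start, end) spans so tags never nest or cross."""
    merged: list[tuple[int, int]] = []
    for start, end in sorted(spans):
        if merged and start <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


def highlight(source: str, spans: list[tuple[int, int]]) -> str:
    out, last = [], 0
    for start, end in merge_spans(spans):
        out.append(source[last:start])
        out.append(f"<mark>{source[start:end]}</mark>")
        last = end
    out.append(source[last:])
    return "".join(out)


source = "Clause 5.1 The Lease expires on\n31 March 2030.\nClause 5.2 Rent is reviewed annually."
span = locate("the lease expires on 31 March 2030.", source)
highlighted = highlight(source, [span] if span else [])
print(highlighted)
```

Character offsets, rather than marked-up text, are the useful output here. A viewer can use them to scroll to and highlight the exact region of the original document.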

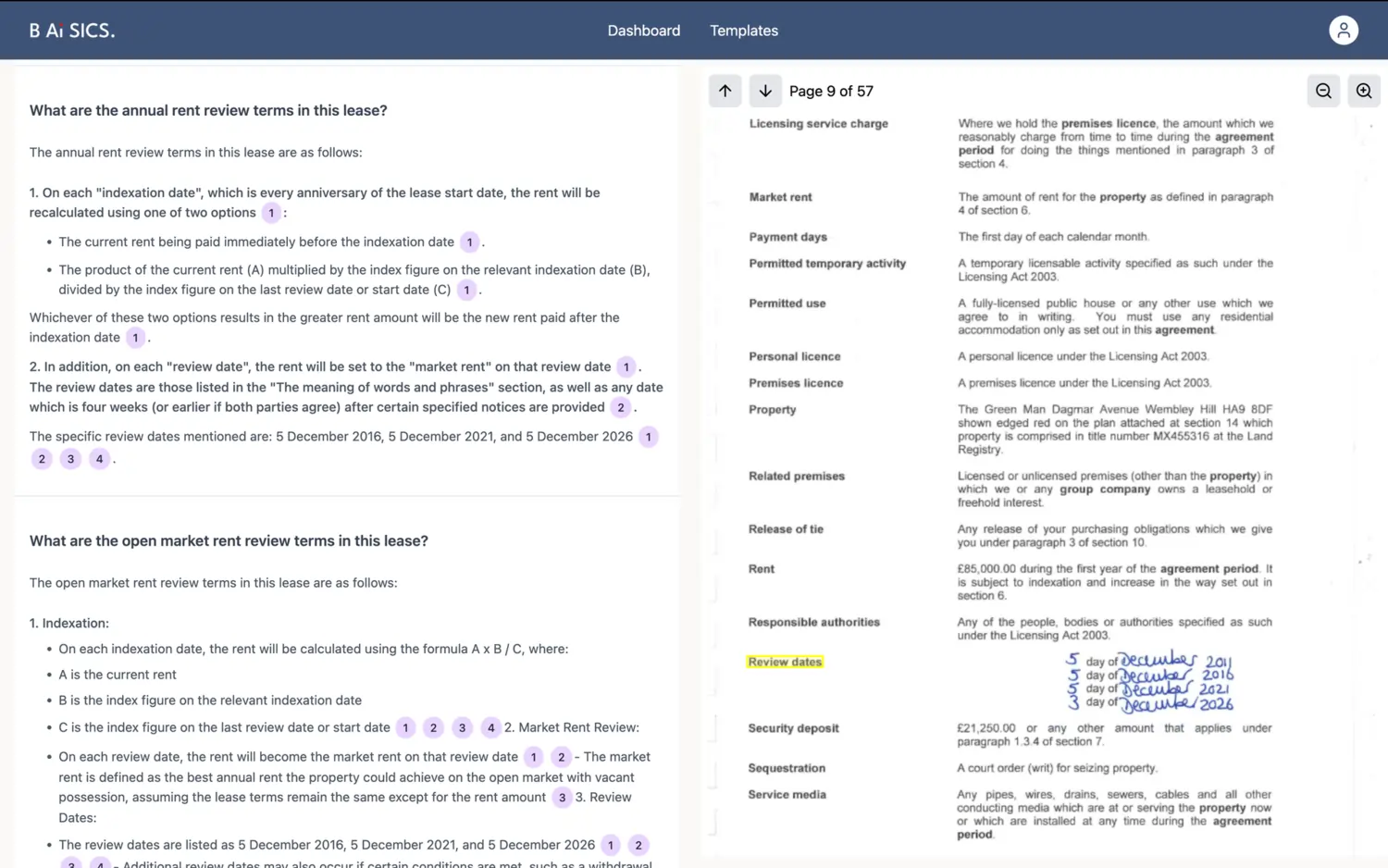

Beyond Context: Handling Poor-Quality Documents & Handwriting Recognition

Real-world business documents are often messy—poorly scanned, handwritten, or incomplete. BAiSICS overcomes these obstacles by integrating state-of-the-art OCR and handwriting recognition to clean, normalise, and structure incoming data. This preprocessing ensures the downstream retrieval pipeline and LLM can work with accurate, high-fidelity text.
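The source does not detail BAiSICS’s cleaning rules. A typical post-OCR normalisation pass looks something like the sketch below, with four steps:

1. Unicode compatibility normalisation, which turns ligatures such as ﬁ into fi.
2. Re-joining words hyphenated across line breaks.
3. Dropping bare page-number lines.
4. Collapsing stray whitespace inside paragraphs.

These rules are illustrative assumptions with known limits. The de-hyphenation step, for instance, will wrongly merge a genuine compound that happens to break at a line end.

```python
import re
import unicodedata

PAGE_NUMBER = re.compile(r"\s*(?:Page\s+)?\d+\s*", re.IGNORECASE)


def normalise_ocr_text(raw: str) -> str:
    text = unicodedata.normalize("NFKC", raw)            # e.g. ligature U+FB01 becomes "fi"
    text = text.replace("\r\n", "\n")
    text = re.sub(r"(\w)-\n(\w)", r"\1\2", text)         # re-join words split by end-of-line hyphens
    lines = [ln for ln in text.split("\n") if not PAGE_NUMBER.fullmatch(ln)]  # drop bare page numbers
    paragraphs = re.split(r"\n\s*\n", "\n".join(lines))  # blank lines separate paragraphs
    cleaned = [re.sub(r"\s+", " ", p).strip() for p in paragraphs]
    return "\n\n".join(p for p in cleaned if p)


raw = (
    "The Tenant shall keep the Pre-\nmises in good repair.\n\n12\n\n"
    "The Landlord shall insure the build-\ning against \ufb01re."
)
cleaned_text = normalise_ocr_text(raw)
print(cleaned_text)
```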

Additionally, BAiSICS supports over ten languages, allowing global SMEs to navigate complex, multilingual contract portfolios. By pairing multilingual OCR with metadata-based retrieval, users can seamlessly extract insights from French leases, German vendor agreements, or Spanish insurance policies, all with the same efficiency and clarity.

Ensuring Speed and Stability at Scale: Bespoke Queueing & Infrastructure

Initially, BAiSICS required around 30 minutes to process a 200-page legal document. Through targeted refinements (optimising the RAG pipeline, implementing caching strategies, and introducing a bespoke queueing system to manage LLM rate limits), the platform now completes the same task in only 10 minutes, a threefold speed-up.
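The source does not describe the bespoke queue itself. As a hedged sketch of the general idea, the code below does two things:

- It funnels LLM calls through a FIFO queue guarded by a token-bucket rate limiter.
- It caches responses under a SHA-256 hash of the prompt, so repeated chunks cost neither time nor quota.

The class names and the rate and capacity values are assumptions. So is the injected fake clock, which is there only so the example runs instantly.

```python
import hashlib
import time
from collections import deque
from typing import Callable


class TokenBucket:
    """Permits `rate` calls per second on average, with bursts of up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.last = clock()

    def acquire(self, sleep: Callable[[float], None] = time.sleep) -> None:
        while True:
            now = self.clock()
            # Refill in proportion to elapsed time, never beyond capacity.
            self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
            self.last = now
            if self.tokens >= 1:
                self.tokens -= 1
                return
            sleep((1 - self.tokens) / self.rate)  # wait until one token is available


class RateLimitedLLMQueue:
    """FIFO queue of prompts; cache hits bypass both the LLM and the rate limiter."""

    def __init__(self, call_llm: Callable[[str], str], bucket: TokenBucket,
                 sleep: Callable[[float], None] = time.sleep):
        self.call_llm = call_llm
        self.bucket = bucket
        self.sleep = sleep
        self.pending: deque = deque()
        self.cache: dict[str, str] = {}

    def submit(self, prompt: str) -> None:
        self.pending.append(prompt)

    def drain(self) -> list[str]:
        results = []
        while self.pending:
            prompt = self.pending.popleft()
            key = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
            if key not in self.cache:
                self.bucket.acquire(self.sleep)
                self.cache[key] = self.call_llm(prompt)
            results.append(self.cache[key])
        return results


# Fake clock and LLM so the example runs instantly and deterministically.
fake_now = [0.0]
calls: list[str] = []


def fake_clock() -> float:
    return fake_now[0]


def fake_sleep(seconds: float) -> None:
    fake_now[0] += seconds


def fake_llm(prompt: str) -> str:
    calls.append(prompt)
    return f"summary of {prompt}"


queue = RateLimitedLLMQueue(fake_llm, TokenBucket(rate=1.0, capacity=2, clock=fake_clock), sleep=fake_sleep)
for chunk in ["chunk 1", "chunk 2", "chunk 1", "chunk 3"]:
    queue.submit(chunk)
answers = queue.drain()
```

The first two calls use the burst capacity, and the repeated "chunk 1" is served from the cache. Only the fourth prompt has to wait for a token, one simulated second.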

These architectural improvements not only outpace competing tools and generic GPT-4-based solutions but also cut operational costs by 60% compared to initial projections. Backed by cloud-native orchestration, vector databases, and graph storage, BAiSICS scales effortlessly as SMEs’ document repositories grow, ensuring stable performance and predictable expenses.

Security, Compliance, and Enterprise-Grade Reliability

Handling sensitive legal documents demands robust security. BAiSICS adheres to the ISO 9001 (quality management) and ISO/IEC 27001 (information security management) standards, employing strict access controls, data encryption, and thorough audit logging. These measures reassure clients that their proprietary data remains protected, confidential, and compliant with industry regulations.
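The source does not say how BAiSICS implements audit logging or access control. One widely used pattern, sketched below, is a hash-chained log. Each entry stores the SHA-256 hash of the previous one, so editing any past entry breaks verification. Both allowed and denied access attempts are recorded. The permission table and user names are hypothetical, and a real deployment would also need to protect the log storage itself.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64
PERMISSIONS = {"alice": {"read", "annotate"}, "bob": {"read"}}  # hypothetical user -> allowed actions


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, user: str, action: str, document_id: str) -> None:
        body = {
            "user": user,
            "action": action,
            "document_id": document_id,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


def access(log: AuditLog, user: str, action: str, document_id: str) -> bool:
    """Check the permission table and log the attempt, whether allowed or denied."""
    allowed = action in PERMISSIONS.get(user, set())
    log.record(user, action if allowed else f"denied:{action}", document_id)
    return allowed


log = AuditLog()
alice_ok = access(log, "alice", "read", "lease-001")
bob_ok = access(log, "bob", "annotate", "lease-001")
chain_ok = log.verify()
log.entries[0]["user"] = "mallory"  # simulate tampering with history
tampered_ok = log.verify()
```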

Moreover, BAiSICS’s CI/CD pipelines and continuous feedback loops ensure steady improvements without sacrificing stability. New features, bug fixes, and enhancements roll out smoothly, supported by automated testing and user-led refinement.

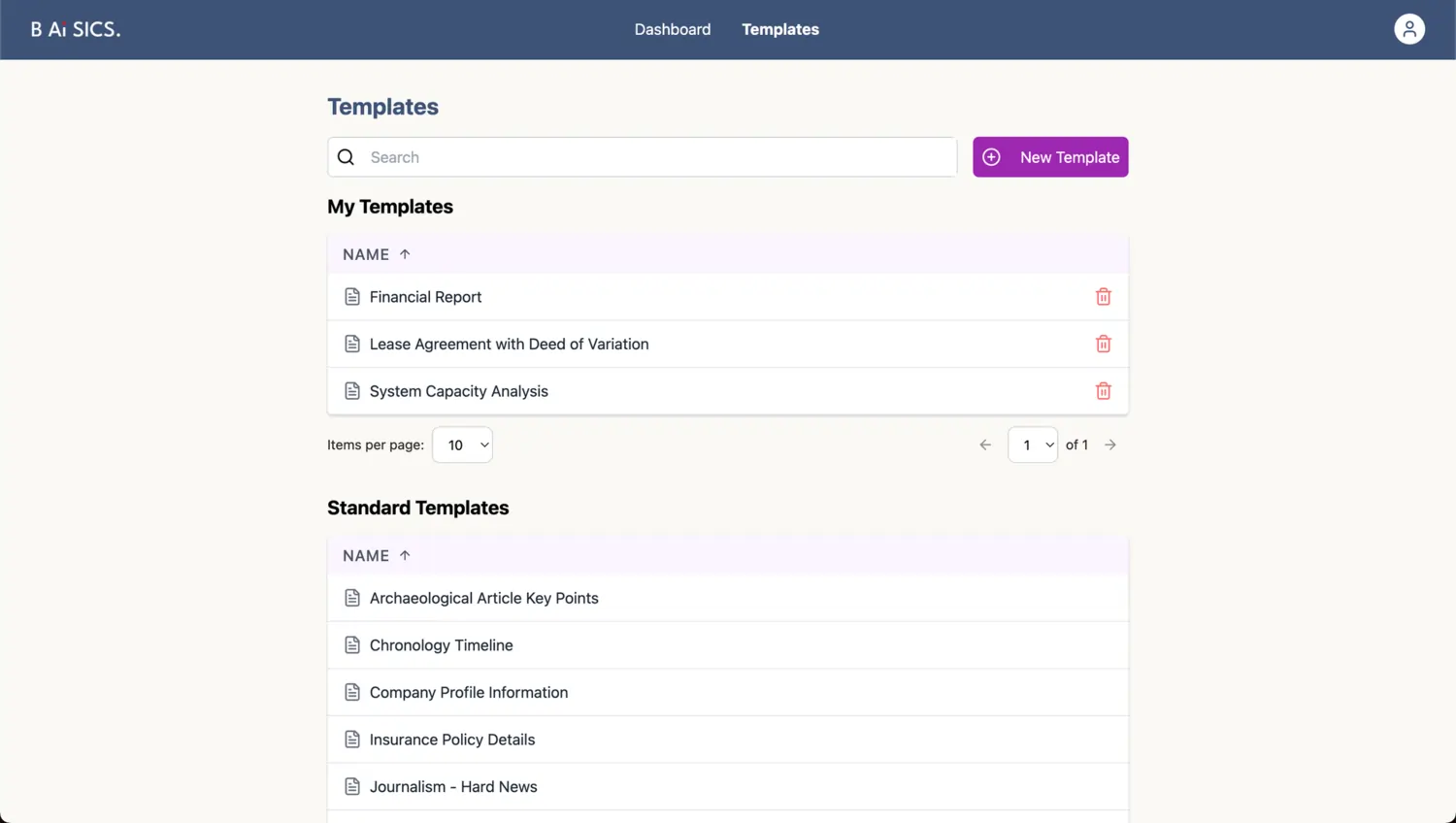

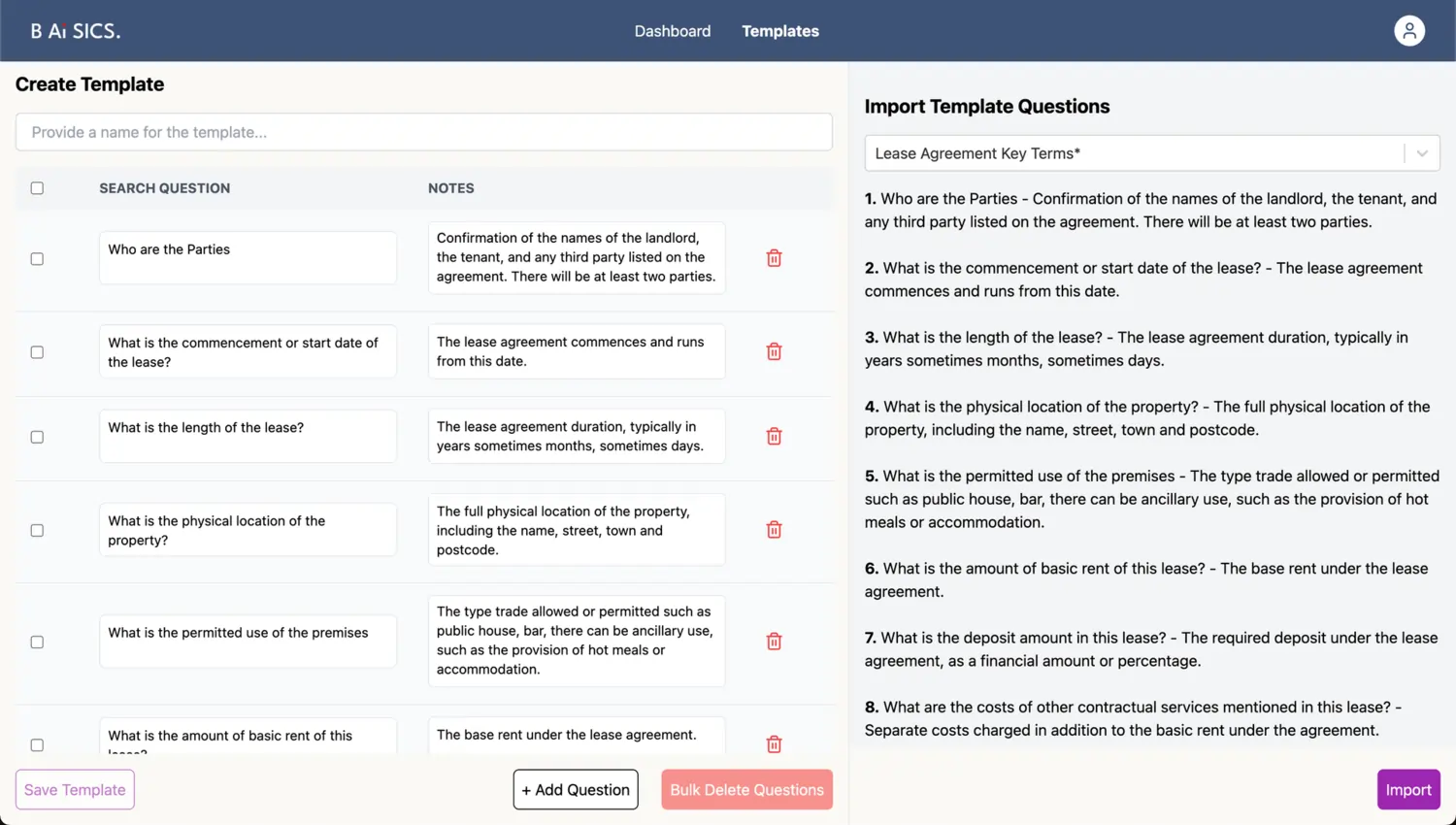

Domain-Specific Templates: Zero-Prompting Interfaces

One common barrier to harnessing AI’s full potential is the complexity of prompt engineering. BAiSICS eliminates this challenge through template-based systems, which standardise frequently performed tasks like extracting financial terms, key dates, or specific clause types. Users select a pre-defined template—no intricate prompt crafting required. This zero-prompting approach empowers SMEs to adopt advanced AI capabilities immediately, delivering rapid gains in productivity and user satisfaction.
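A template system of this kind can be as simple as a table of pre-written prompts with named slots, as in the sketch below. The user picks a template and, at most, fills in a field such as the clause type. The template names and wording are hypothetical, not BAiSICS’s actual templates.

Python’s `string.Template` raises `KeyError` when a required slot is missing, so configuration mistakes surface before any LLM call is made. It also inserts the document text verbatim, so a dollar sign in a contract is not misread as a placeholder.

```python
from string import Template

# Hypothetical pre-written templates; users choose one instead of writing a prompt.
TEMPLATES = {
    "key_dates": Template(
        "List every date in the document below that starts, ends, renews or terminates "
        "an obligation, with the clause number for each.\n\nDocument:\n$document"
    ),
    "financial_terms": Template(
        "Extract all rents, deposits, fees and payment schedules from the document below "
        "as a table with amount, frequency and clause number.\n\nDocument:\n$document"
    ),
    "clause_type": Template(
        "Find every $clause_type clause in the document below. Quote each one verbatim "
        "with its clause number.\n\nDocument:\n$document"
    ),
}


def build_prompt(template_name: str, document: str, **fields: str) -> str:
    """Fill a named template; raises KeyError if the template or a required field is missing."""
    return TEMPLATES[template_name].substitute(document=document, **fields)


prompt = build_prompt(
    "clause_type",
    "Clause 9: Neither party is liable for force majeure events. Rent is $500 per month.",
    clause_type="force majeure",
)
print(prompt)
```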

Results & Impact: Surpassing Frontier AI Solutions

Comparative benchmarks show BAiSICS’s customised RAG pipeline outperforms generalist models, including GPT-4 derivatives and other legal AI platforms, in both accuracy and processing speed. Clients report a threefold reduction in analysis time and significantly improved document comprehension.

Law firm Morgan and Clarke stated:

“BAiSICS has revolutionised our approach, dramatically accelerating the review process for commercial leases and other property documents. We now rely on it for nearly every client instruction. The platform is straightforward, user-friendly, and highly effective. It’s an excellent product that excels at what it does.”

Equally important, users appreciate the transparency and verifiability offered by source highlighting, which facilitates swift validation and strengthens trust in the system’s outputs.

Future Directions: Expanding the Frontiers of Retrieval Augmented Generation

As demand for domain-specific, high-precision AI solutions grows, BAiSICS serves as a template for moving beyond generic capabilities. Its roadmap includes deeper practice management integrations, more advanced metadata filtering, and expansion into additional verticals—such as insurance and real estate—each posing unique challenges in document analysis.

Ongoing R&D will refine hybrid retrieval, enhance semantic embeddings, and explore on-device LLM caching for secure or offline scenarios. As more SMEs witness the tangible benefits of this tailored RAG methodology, BAiSICS is well-positioned to remain at the forefront of intelligent document processing, RAG-based legal technology, and enterprise-grade AI solutions.

If you would like to read more about BAiSICS, check out our case study.

Conclusion

Transform your business with advanced AI agents and automation solutions!

For organisations looking to enhance their operations and drive innovation, visit OpenKit today.